Redis Enterprise has been benchmarked to demonstrate true linear scaling—going from 10M ops/sec with 6 AWS EC2 instances to 30M ops/sec with 18 AWS EC2 instances. If a Redis server reaches the point where it has more reads/writes than it can sustain, there are multiple ways to scale your database:

The re-sharding mechanism of Redis Enterprise is based on Redis replication. Whenever a shard needs to be scaled out, the Redis Enterprise cluster launches another Redis instance and replicates to it half of the hash slots of the original shard. Once the new shard is populated, traffic is sent to both shards by the proxy in a manner completely transparent to the app.

There are two ways to scale database shards:

2. Scaling out by adding node(s) to the Redis Enterprise cluster, rebalancing and then resharding your database. This scenario is useful if more physical resources are needed in your cluster in order to scale your database:

By default, every database created over the Redis Enterprise cluster is operated with a single redundant proxy. Although the proxy is extremely efficient and can usually handle more than 1 million ops/sec when running on a modest cloud instance, there are some situations in which scaling the proxy is needed to deal with network bandwidth or packets-per-second limitations. Redis Enterprise allows you to scale the proxy across multiple nodes of the cluster without changing your app code. Redis clients can use a DNS round robin to select which proxy to work with every time a new connection is created.

Furthermore, in order to optimize network traffic across the cluster nodes, Redis Enterprise selects a shard placement policy according to the proxy configuration. When a single proxy configuration is used, Redis Enterprise will try to put as many shards as it can on each node (this is also called a dense configuration). When a multi-proxy configuration is used, the shard placement policy for the cluster will be based on a sparse configuration.

Redis Enterprise supports the open source (OSS) cluster API to allow only one network hop between your client and the cluster, at any cluster size. This way, your clients are exposed to the actual placement of the shards on the cluster node using the OSS cluster protocol, as explained below:

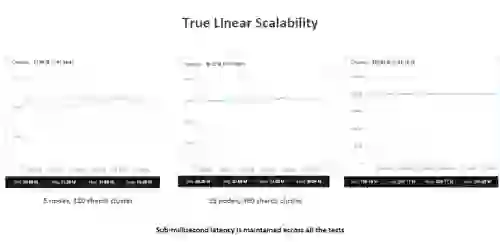

When working with the OSS cluster API, Redis Enterprise is scaled in a true linear manner. The figure below summarizes the results of a series of benchmarks:

Redis Enterprise allows you to scale your read operations by creating another database (on the same cluster) that will be served as a read-replica of your original database using the ‘replica-of’ feature. Your read-replica is treated as a completely different database, and can be configured with a different number of shards and different availability or durability characteristics. You can create multiple read-replicas or just increase the number of shards in an existing read-replica in order to further scale your read operations.

Next section ► Highly Available Redis